Almost Touching the Tablet

Tuesday, January 26, 2010 at 2:55PM

UPDATE: I got it wrong. But I think the trend is right.

Tomorrow…

Apple is rumoured to be announcing a new Tablet device. You probably know this. Rumours of it being shiny and thin (which it probably will be.) How it will be always connected to the internet, and show you books and newspapers and movies on-demand (which it probably can.) How it will have some magical new jaw-dropping interface (which it probably will have.)

But what excites me most is a possible feature that no one seems to have thought of. It's not sexy, and it's something we use every day on our desktop machines. In fact, you probably can't remember computing without it. And yet, I feel it's the key to the future of computing, and without it, the Tablet will not be able to spawn the New Age of Computing. So what's this amazing technology? I'll tell you: mouseOver. You know, the feature whereby links on a page change when you mouse over them, buttons darken and tooltips appear. The subtle interaction that lets you learn more about an interface without committing to anything as serious as a mouse click.

Of course, the Tablet is all about Multitouch -insert choirs of angels- so there's no mouse to be seen. Just a finger or three. So let's call it 'touchOver'. Imagine icons that darken, lighten and pop-out as you waver your finger over them like a tantalising box of fancy chocolates.

So, why bother to include an interaction feature from the past?

First, let's look at the existing benefits of mouseOver in desktop and web applications:

- Users feel more comfortable with unfamiliar interfaces, exploring without the commitment of clicking

- The user has feedback which helps them "aim" their cursor

Both of these are valuable. But in the history of multi-touch interfaces I rarely see any mention of support for the touchscreen equivalent of mouseOver. I don't know why. Maybe it has been technically difficult to cleanly detect the position of fingertips as they hover over a touch surface. Maybe the interaction design was never solved. Maybe I've been looking in the wrong places. Maybe it wasn't deemed necessary.

But. Fast forward to now — see a recently awarded patent to Apple…

[0095] Another potential technique for the determination between "hovering" and "touching" is to temporally model the "shadow" region (e.g., light impeded region of the display). In one embodiment, when the user is typically touching the display then the end of the shadow will typically remain stationary for a period of time, which may be used as a basis, at least in part, of "touching". In another embodiment, the shadow will typically enlarge as the pointing device approaches the display and shrinks as the pointing device recedes from the display, where the general time between enlarging and receding may be used as a basis, at least in part, of "touching"…

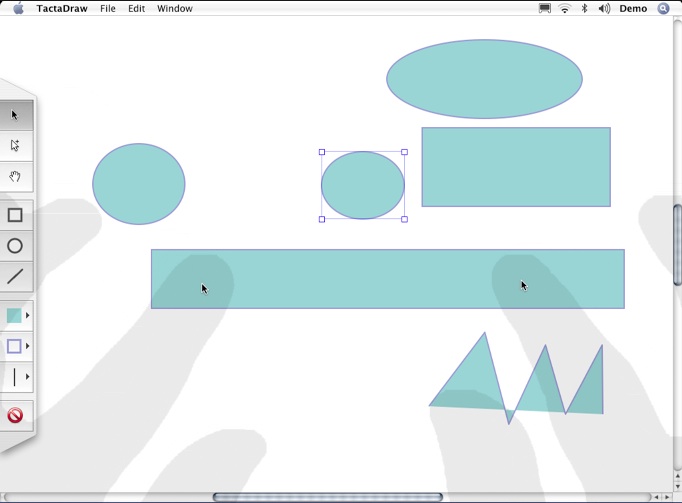

…where it seems that Apple now has the technology, the art and the desire to achieve touchOver. Their patent in essence describes an artificially drawn 'shadow' of each fingertip as it hovers over the interface. Here's a very quick mockup I made of how this may look, as applied to iPhone.

touchOver mockup from Keith Lang on Vimeo.
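To make the first heuristic in the patent excerpt quoted above concrete, here is a minimal Python sketch: if the tip of the finger's shadow stays put for a while, call it a touch, otherwise treat it as a hover. Everything named here (`ShadowSample`, `classify`, the 3-pixel drift and 0.15-second dwell thresholds) is my own assumption for illustration, not anything taken from the patent or from Apple's software.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class ShadowSample:
    """One hypothetical sensor reading of the shadow's tip."""
    t: float  # timestamp in seconds
    x: float  # tip position in pixels
    y: float


def classify(samples, max_drift_px=3.0, min_dwell_s=0.15):
    """Return 'touching' if the shadow tip stays within max_drift_px
    of an anchor point for at least min_dwell_s, else 'hovering'.
    Both thresholds are made-up values, not from the patent."""
    if not samples:
        return "hovering"
    anchor = samples[0]
    for s in samples[1:]:
        if hypot(s.x - anchor.x, s.y - anchor.y) > max_drift_px:
            # The tip moved: restart the dwell timer from here.
            anchor = s
        elif s.t - anchor.t >= min_dwell_s:
            return "touching"
    return "hovering"


# A finger sweeping across the screen vs. one that settles in place.
sweeping = [ShadowSample(t=i * 0.1, x=i * 10.0, y=0.0) for i in range(5)]
settled = [ShadowSample(t=i * 0.1, x=100.0 + 0.5 * (i % 2), y=50.0) for i in range(5)]
print(classify(sweeping), classify(settled))
```

The patent's second heuristic (the shadow growing and shrinking as the finger approaches and recedes) would need the shadow's area over time as well, which this sketch leaves out.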

So why does touchOver matter so much?

First, I think this will make the touchscreen user experience even better. Fewer mis-tapped buttons because you have a greater sense of where the device 'thinks' your finger is. More accurate detection of taps because the device knows about your finger position even before you tap.
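Here is one way that second point might work, as a rough Python sketch: the device remembers which button was highlighted while the finger hovered, and if the tap then lands just outside every button, it gives the tap to the highlighted one. The `Button` class, `resolve_tap` and the 8-pixel slop are hypothetical names and numbers of my own, not a description of any shipping touchscreen.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Button:
    name: str
    x: float  # left edge
    y: float  # top edge
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h

    def distance_to(self, px: float, py: float) -> float:
        """Distance from a point to the button's rectangle (0 if inside)."""
        dx = max(self.x - px, 0.0, px - (self.x + self.w))
        dy = max(self.y - py, 0.0, py - (self.y + self.h))
        return (dx * dx + dy * dy) ** 0.5


def hovered(buttons: List[Button], px: float, py: float) -> Optional[Button]:
    """The button to highlight for a hovering finger, if any."""
    for b in buttons:
        if b.contains(px, py):
            return b
    return None


def resolve_tap(buttons: List[Button], tap: Tuple[float, float],
                last_hover: Optional[Button], slop_px: float = 8.0) -> Optional[Button]:
    """A direct hit wins; a near miss falls back to the button that was
    highlighted during hover. slop_px is an assumed tolerance."""
    hit = hovered(buttons, *tap)
    if hit is not None:
        return hit
    if last_hover is not None and last_hover.distance_to(*tap) <= slop_px:
        return last_hover
    return None


buttons = [Button("ok", 0, 0, 40, 40), Button("cancel", 50, 0, 40, 40)]
highlighted = hovered(buttons, 38, 20)  # finger hovers near the edge of "ok"
print(resolve_tap(buttons, (44, 20), highlighted))  # tap slips into the gap
```

Without the hover information, the same tap in the gap between the two buttons would simply be lost.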

Secondly, and more importantly, it serves as a stepping stone to a multitouch proxy device.

What do I mean by 'proxy device'? Take the mouse for example. You can see a 'mirror' of the mouse on the screen at all times (the cursor) that lets you interact without looking at the physical device.

For a multitouch tablet to replace, or at least augment, the mechanical keyboard and mouse, there should be a way to let you keep your eyes on the screen at all times. I know of at least one device that works in this way, the Tactapad by Tactiva (never released commercially).

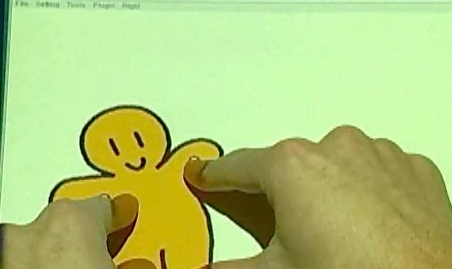

You can watch a movie of the Tactapad in action here. The Tactapad uses a video camera looking down on the user's hands to generate an artificial silhouette. A sufficiently advanced multitouch trackpad could generate an even more minimalist/clean version. Note: I'm not saying Apple would mimic the tool workflow as per Tactapad, simply that they'd share the idea of proxy manipulation.

The end result is the same.

A device that brings all the benefits of a dynamic multitouch interface to the desktop computing experience.

Caveats

"But touchscreens are so finicky!"

Lay your palm down on many touchscreens and it will be incorrectly registered as a touch event. Other Apple patents describe logic to rule this out. In addition, they boast the ability to switch between 'typing' mode (all fingers down), 'pointing' mode (one finger down) and 'drawing' mode (three fingers down, like holding an imaginary pencil). It may be a solvable problem.
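A toy Python sketch of both ideas: throw away contacts that are too big to be a fingertip, then pick a mode from how many fingers are left. I haven't seen how the patents actually do this, so the area threshold, the `Contact` class and the guess that 'all fingers down' means eight or more contacts are all assumptions of mine.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Contact:
    """One hypothetical touch contact reported by the surface."""
    x: float
    y: float
    area_mm2: float  # size of the contact patch


def fingertips(contacts: List[Contact], max_finger_area_mm2: float = 150.0) -> List[Contact]:
    """Crude palm rejection: keep only contacts small enough to be a
    fingertip. The 150 mm^2 cut-off is an assumed value."""
    return [c for c in contacts if c.area_mm2 <= max_finger_area_mm2]


def input_mode(contacts: List[Contact], typing_min_fingers: int = 8) -> Optional[str]:
    """Map the number of fingers down to a mode, or None if unclear."""
    n = len(fingertips(contacts))
    if n == 1:
        return "pointing"
    if n == 3:
        return "drawing"
    if n >= typing_min_fingers:  # assumption: 'all fingers' means 8 or more
        return "typing"
    return None


finger = Contact(10.0, 10.0, 80.0)
palm = Contact(60.0, 120.0, 2000.0)
print(input_mode([finger, palm]))  # the resting palm is ignored
```

A real implementation would surely look at contact shape and timing too, not just area.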

"I'd get tired holding my fingers up all day"

Yes, you wouldn't want to hold your fingers 1cm above the desk all day long. I'm sure there is some solution. See above.

"But what about haptics/force feedback?"

Yes, haptics/force feedback may help you 'feel' your way around an interface without looking. I've been lucky enough to play with some lab-quality (read: $$$) haptic interfaces and agree that it's completely possible to emulate the feel of pressing a physical button or pushing around a lump of clay. But those devices were not cheap, light or low-power. I'm looking forward to sophisticated haptics in our everyday devices as much as you, but in some years' time.

"I'd never give up programming on my trusty IBM mechanical clunkity-clunk keyboard."

Maybe writers and programmers will stick to using mechanical keyboards forever. Maybe we'll always keep a mechanical keyboard handy. But it will get harder to resist the appeal of a device where everything is under your fingertips… imagine, for example, a Swype-like input interface that dynamically changes its dictionary depending on what application, or even what part of a line of code, you're currently typing in. A truly context-aware device, done in a subtle and sensible way.
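To give a feel for the context-aware dictionary idea, here is a deliberately tiny Python sketch. It swaps word lists depending on the application and on whether the cursor sits inside a code comment, and it uses plain prefix matching as a stand-in for real gesture recognition. The word lists, the `"code_editor"` label and the function names are invented for the example.

```python
from typing import List

# Two tiny made-up word lists standing in for real dictionaries.
PROSE_WORDS = ["red", "read", "really", "definitely", "default"]
CODE_WORDS = ["def", "del", "return", "raise", "range"]


def active_dictionary(app: str, text_before_cursor: str) -> List[str]:
    """Pick a dictionary from the app and the position in the line.
    A '#' before the cursor is taken to mean 'inside a comment', which
    is a crude test (a '#' inside a string literal would fool it)."""
    if app == "code_editor" and "#" not in text_before_cursor:
        return CODE_WORDS
    return PROSE_WORDS


def suggest(app: str, text_before_cursor: str, limit: int = 3) -> List[str]:
    """Suggest completions for the word fragment just before the cursor."""
    if not text_before_cursor or text_before_cursor[-1].isspace():
        return []  # no fragment to complete
    fragment = text_before_cursor.split()[-1]
    words = active_dictionary(app, text_before_cursor)
    return [w for w in words if w.startswith(fragment)][:limit]


print(suggest("code_editor", "de"))          # code keywords
print(suggest("code_editor", "x = 1  # de"))  # prose, because we're in a comment
```

The same fragment gets different suggestions depending on where in the line you are, which is the whole point.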

"Why hasn't someone done it before?"

Hehe. They said that to the Wright brothers too. Actually, I'd love to mock this up using something like Keymote for iPhone, but it's very difficult without touchOver-like functionality.

And yes, Apple predictions are folly. But from my perspective it's simply a question of 'when' and 'by who'. And from my perspective, the answers are 'soon' and 'Apple'.

Past. Present. Future.

Here's the bit where I'd love your help: Have you seen any examples of touchscreen interfaces working with touchOver-like capability? How did they work? What other problems do you envision?

Is touchOver essential to a rich desktop multitouch experience? I love the fluidity of interfaces like this multi-touch puppetry (via Bill Buxton) and think touchOver will be essential to move rich interaction like this to mainstream computing. Let me know. :)

Keith Lang | 4 Comments | Multitouch, Touch, apple, interaction, patents in future

Reader Comments (4)

I think there is the problem that your fingers hide the visual representation of the touchOver. For example, when I'm typing on the iPhone keyboard then I can't see the letter I'm pressing. That's why you filmed the mockup from a 45° angle. But the eyes of the user are at a ~90° angle to the screen. Of course, when you have bigger items that's no problem. But we always have a limited screen and the apps would look like these phones for old people.

Peace, andreaskern.

Thanks andreaskern,

I wasn't clear — although the Apple patent describes something like my iPhone mockup, where I think these will be needed is when you're using them as a proxy. That is, you'll see these circles etc on the monitor in front of you, while you're actually touching another (tablet-like) device on the desk.

Of course. In the case of a proxy it makes sense. That's like this http://10gui.com with a touchOver event. And in the future maybe also with force feedback.

Peace, andreaskern.

@andreaskern Bingo ;-)